Lexical Analysis¶

Lexical analysis [] relates structure to meaning. Structures as input to lexical analysis consist of distinguishable signalling elements. Cave paintings used pictures of creatures and tools as signalling elements. In Scripts letters play the role as signalling elements. The signalling elements of DNA are the nucleotide basis Adenin, Guanin, Cytosin, Thymin, and Uracil (A, G, C, T, and U). Lexical analysis is the first step in the process of interpretation. It detects signalling elements in structures and associates them with atomic chunks of meaning.

|

|

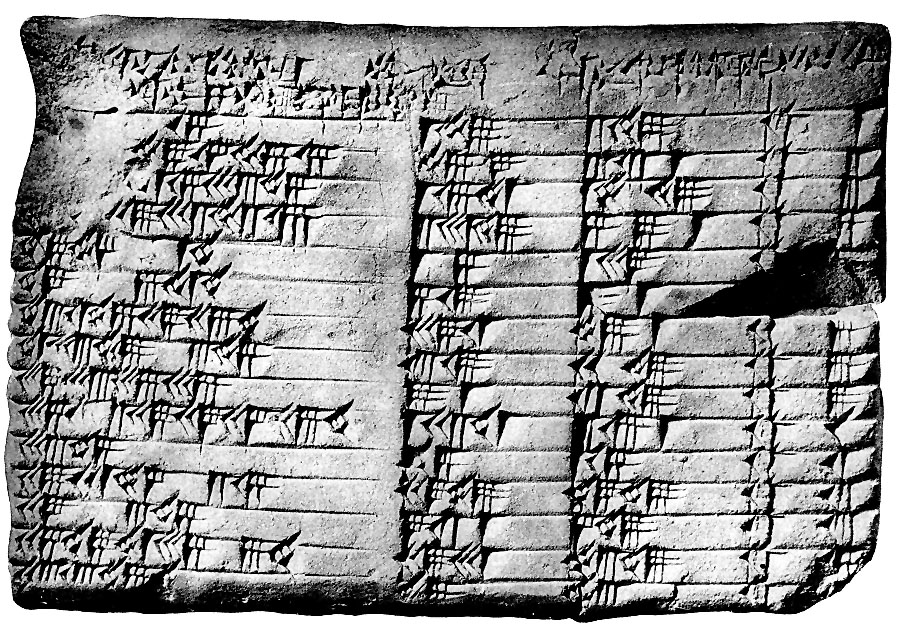

Fig. 1 Examples of lexical structures with signalling elements: a) Binary representation of ‘hello world’. b) Plimpton 322, Babylonian script listing pythagorean triples [1]. c) DNA double helix [2].¶

Information is what informs []. It contains knowledge, to be observed by a conscious observer, or data to be processed. The information content, i.e. the entropy [], is only different from zero, if it is present at its author and lacks at its reader. Knowledge, in contrast to information, is not zero if it is present in both, author and reader. Information is the difference between the knowledge divulged by an author and the knowledge already known by a reader.

Information transfer over space and time is called communication. To communicate a physical substance is modelled by an author and transported to its reader. Examples of physical substance are electromagnetic waves, sounds of speech, gestures, pictures, letters on paper, or files on a hard drive Communication is the basis for cooperation of groups of organisms or components, such as bees in a beehive, an ant colony, human society, or autonomous robots. Consequently, the design of effective lexical structures and the implementation of lexical analysis is crucial for any kind of advancement to be achieved by cooperating entities.

In the history of human civilization the introduction of new more efficient writing systems enacted dramatic turning points in cultural development []. A crucial role in the efficiency of a writing system is the size of the alphabet. A reduced set of symbols facilitates learning and supports distinctness. First writing systems [] were pictographic. They may have been used for bookkeeping in agriculture to compensate human forgetfulness. Quickly writing became the pillar of efficient economics and administration. Pictures convey information relying on the reader’s intuition [7]. However, for communication to happen, author and reader must share a cultural context [8]. Further, the space of describable things is restricted by the set of used pictures. Later proto-writing systems [] used symbols to convey specific information.

Logographic systems, such as Hieroglyphs associate symbols with a grammatical word. They were used to record customs and living conditions. When humans started to record history, this actually marked the line between prehistoric and historic times. From now on, writing systems [] require knowledge of the language being used. However, the range of describable things extends beyond the set of known objects. Newly invented concepts may be associated with new words relying on known objects and their relations in a formal and distinct manner. The range of possible statements even exceeds what is imaginable.

Phonetic writing and alphabetic systems, further reduced the set of applied symbols. They resulted in achievements such as medicine, philosophy, legal code, and mathematics. First wide access to education only occurred in cultures that adopted small enough alphabets to be learned in a reasonable amount of time. The application of digital circuits in the last half of the 20th century sparked an unprecedented propagation of science and technology. The very basis of operations in digital circuits is an alphabet consisting only of ‘0’ and ‘1’. An overview over some writing systems in history is shown in Table 1.

System |

Type |

Year |

Char. Number |

|---|---|---|---|

DNA |

nucletoid |

4 109 BCE |

4 |

Cave paintings |

proto-writing |

40000 BCE |

unknown |

Cuneiform |

pictographic |

3200 BCE |

approx. 1500 |

Egypt. Hieroglyphs |

logographic[3] |

2800 BCE |

approx. 1000 |

Paleo-Hebrew |

phonetic |

1000 BCE |

22 |

Latin |

alphabetic |

700 BCE |

26 |

Nabatean-Arabic |

phonetic |

600 BCE |

22 |

Maya Hieroglyphs |

logographic |

300 BCE |

approx. 700 |

Devanagari |

syllabic |

100-400 CE |

47 |

Binary Code |

digital |

approx. 1900 |

2 |

Klingon[4] |

alphabetic |

1979 |

26 |

Lexical structures are also found in nature. With the discovery of nuclein in the 1860’s by Friedrich Miescher [], and the discovery of the double helical structure of DNA molecules by Rosalind Franklin, James Watson, and Francis Crick in the 1950’s [], it has become evident that the genetic code for protein constructions in living organisms, is indeed encoded in a sequential data stream. The code uses an alphabet of only four nucleotide basis, namely A (adenine), C (cytosine), G (guanine), and T (thymine) [] []. Understanding the genetic code has become a field of its own, namely bioinformatics [].

In lexical structures, meaning is carried by patterns and the location where they occur.

Consider the sequence 30 apples: $5. The number 30 instantiates the

pattern number and indicates the amount of something given by a category

pattern specified as apples. The colon associates the amount with what

follows. Here 30 apples are related to the price in terms of a number 5 of the currency symbol

$. That is, it conveys the message: “30 apples cost five dollars”. This

meaning is only conveyed, if the patterns occur in that order. If the order is

changed, the meaning may either completely diverge (e.g. 5 apples: $30), or

it may loose its interpretability (e.g. 30:5 $apples).

Location can only exists in an sortable structure. Vice versa, a set is sortable, if for each element there exists a procedure to determine its distinct location []. From all possible ways to order elements, the linear line-up is the most elementary. It solely requires the notion of a starting element and a procedure to determine successor for a given element. This parallels the natural numbers in mathematics (start=0, successor=current+1). Consequently, it comes natural to associated a natural number with a position in a linear line-up. The ability to express position distinctly with a single number and the minimalistic description of its sorting order make linear data sequences the prime candidate for the design of lexical structures.

State Machines¶

Fig. 2 shows a state machine representing the slightly idealized daily life of a student. His states are ‘study’, ‘eat’, and ‘sleep’ as they are shown as names framed by circles. Arrows between the states signify transitions between those states which are triggered by a finite set of events. Such events are specified as annotations to the arrows, namely: the student feeling hunger, fatigue, satiety, and an alarm clock that buzz-es. An interpretation goes as follows. Assume, the student is in state sleep. When the alarm clock buzz-es, he enters the state study. He continues until hunger emerges, or he experiences fatigue. The first event causes him to eat the latter causes him to sleep, etc.

Fig. 2 Description of a student’s life in terms of a state machine.¶

A state machine is defined by of a set of states, an explicit initial state, a finite set of events, transition rules, and actions that are applied upon transitions []. A state in the state machine can be either active or inactive indicating its ability to react to incoming events. A state machine exhibits behavior by activation and deactivation of states as reaction to events specified as ‘transition map’.

- Transition Map:

A transition map of an active state determines what event causes what subsequent states to become active in favor of the active state.

A finite state machine (FSM) [], there is only one state active at a time. It is called the current state. This implies that there is no transition on the ‘no event’ (the so called ε-transition []) and transition maps associate an event with a distinct successor state. Quex generates FSMs [5].

Finite state machines handle events at discrete times, i.e. sequentially. The current state is the deterministic result of the initial state and a specific event sequence which preceded. As such, the activation of a state coincides with the detection of a specific pattern in a sequential stream of letters. Since the set of possibly occurring letters is a closed set, namely the alphabet, letters may play the role of events in the FSM. In the graphical display of a state machine, letter sequences correspond to paths along the states which they activate. Deterministic finite automatons (DFAs) [] are special state machines for pattern detection. They mark states that signal a pattern match in the input stream by labelling them as acceptance states.

Fig. 3 Pattern matching deterministic finite automaton.¶

Fig. 3 displays a DFA detecting

the words nice and new. A double circle indicates an acceptance state.

If the letters n, e, w occur when the state machine is in the

initial state 0, the state sequence 1, 5, and 6 is passed. State 6 is an

acceptance state indicating that the pattern matched. Similarly, the

n, i, c, e guides through 1, 2, 3, 4, where the last state

indicates a match. However, a deviating sequence such as n, i, p

drops out in state 2, because there is no transition on p. The sequence is

a mismatch. For the sake of precise discussions let the terms ‘lexatom’ and

‘lexeme’ be defined as below.

- Lexatom:

A lexatom is one instance from a finite set of elements (the ‘alphabet’) in data sequence. A lexatom triggers exactly one state transition, or failure.

Quex introduces the term lexatom to distinguish the concept from closely related terms such as letter, character, event, or code unit. It is discussed in detail in later sections.

- Lexeme:

A lexeme [6] is a finite sequence of lexatoms.

The term pattern and match can be given a precise definition.

- Pattern:

A pattern circumscribes a set of lexemes. It is represented as a configuration of a DFA where only those lexatom sequences reach an acceptance state which belong to the pattern’s set of lexemes.

- Match:

A match is the incidence of a DFA reaching an acceptance state. The sequence of lexatoms that triggered the transitions from initial state to acceptance state constitute the matching lexeme.

From here, the lexical analysis step and lexical analysis can be defined with respect to the underlying mechanics.

- Lexical analysis step:

A lexical analysis step starts with the attemp to match a set of patterns at a specific input position in the input stream. Once it is determined which pattern matches, or which matches best, a reaction according to the ‘winning’ pattern is executed. Eventually, the input position is set to where the next matching shall start.

- Lexical analysis:

Lexical analysis is a sequence of lexical analysis steps.

Pattern matching itself is deterministic, since it is implemented as state transitions of a DFA. If it can be assumed, that the reactions to pattern matching do not influence the input stream, and that the subsequent setting of the input position is deterministic, then a lexical analysis step is deterministic. By induction, with this approach of lexical analysis, the token stream is determined distinctly by a given input stream. This has an import impact: it enables precise interpretation, which requires a distinct relation between information and its representation [].

With a basic understanding of lexical structures and their role in history and nature, the following sections provide with a detailed view on the actual process of lexical analysis.

Footnotes